In the realms of software engineering and artificial intelligence (AI), achieving both efficiency and correctness in code is paramount. While current language models have made significant strides in generating functionally correct programs, they often struggle with runtime and memory utilization optimization. This inefficiency can be particularly detrimental for large-scale applications where performance is crucial. Consequently, there is a growing demand to develop strategies that improve code efficiency without compromising its correctness.

The Challenges in Code Optimization

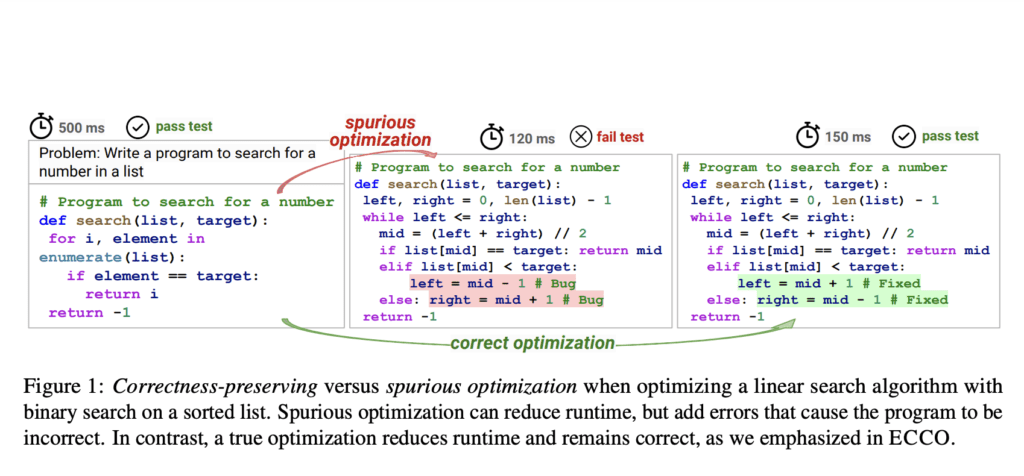

To create effective algorithms and tools, it’s essential to produce functionally correct code that operates efficiently, utilizing minimal computational resources. However, optimizing code efficiency without introducing errors is a persistent challenge. While existing techniques such as in-context learning, iterative refinement, and fine-tuning based on execution data hold promise, they often fail to preserve the functional correctness of the generated code. These approaches, though valuable, may lead to optimizations that introduce errors or reduce the performance of certain operations.

Introducing the ECCO Benchmark

Researchers from the Language Technologies Institute at Carnegie Mellon University have developed the ECCO benchmark, which is designed to address these challenges by evaluating program efficiency while preserving correctness. ECCO operates on two paradigms: pure language-based code generation and history-based code modification. This benchmark aims to assess the efficiency of code generated by language models, providing a platform for continued research and development in this area.

To ensure consistency in evaluation, ECCO employs JUDGE0, a cloud-based execution engine, which guarantees reproducible execution outputs across diverse hardware environments. Supporting more than 60 programming languages, ECCO offers a versatile tool for evaluating the performance of various language models.

ECCO’s Setup and Capabilities

The ECCO benchmark utilizes a comprehensive setup, integrating Amazon EC2 instances to execute code in a controlled environment. This ensures reliable and accurate results while maintaining the consistency of execution across different platforms. The benchmark includes a dataset of over 50,000 Python answer pairs from 1,300 competitive programming problems, sourced from the IBM CodeNet dataset and the AlphaCode project. This rich dataset provides diverse test cases for evaluating language models’ ability to optimize code without sacrificing correctness.

Experimental Insights and Findings

In their experiments, the research team evaluated a range of strategies to enhance program efficiency while maintaining functional correctness. The key strategies tested include in-context learning, iterative refinement, and fine-tuning. The study highlighted that incorporating execution information is essential for maintaining correctness, while pure language feedback significantly boosts efficiency. For instance:

- History-based modification yielded substantial improvements in both program speedup and memory reduction.

- Approaches utilizing pure language feedback demonstrated the best speedup across models.

- Iterative refinement, especially when combined with execution feedback, resulted in the highest correctness rates, emphasizing the importance of execution outputs in guiding optimization efforts.

Despite these advancements, the research found that optimizing for efficiency often came at the cost of correctness. For example, DeepseekCoder achieved a 66.6% correctness rate in history-based modification but sacrificed some correctness in the process. This illustrates the complex trade-offs between correctness and efficiency that must be navigated in code optimization.

ECCO as a Future Testbed for Research

The ECCO benchmark has proven to be an invaluable tool for evaluating code optimization strategies. It provides a comprehensive testbed for understanding the delicate balance between code efficiency and correctness. By offering insights into the strengths and weaknesses of current methods, ECCO sets the stage for developing more robust strategies that can address these trade-offs effectively.

Conclusion

The research surrounding the ECCO benchmark underscores the ongoing efforts to improve code efficiency without sacrificing correctness. With its robust setup and extensive dataset, ECCO provides a critical foundation for future research into language models and code generation. As the demand for efficient, high-performing software grows, ECCO will play an essential role in driving innovations that balance performance with accuracy, paving the way for advancements in both AI and software engineering.