In pc science, code effectivity and correctness are paramount. Software program engineering and synthetic intelligence closely depend on creating algorithms and instruments that optimize program efficiency whereas making certain they perform appropriately. This entails creating functionally correct code and making certain it runs effectively, utilizing minimal computational assets.

A key concern in producing environment friendly code is that whereas present language fashions can produce functionally right packages, they usually want extra runtime and reminiscence utilization optimization. This inefficiency will be detrimental, particularly in large-scale purposes the place efficiency is essential. The power to generate right and environment friendly code stays an elusive purpose. Researchers purpose to deal with this problem by discovering strategies that improve code effectivity with out compromising its correctness.

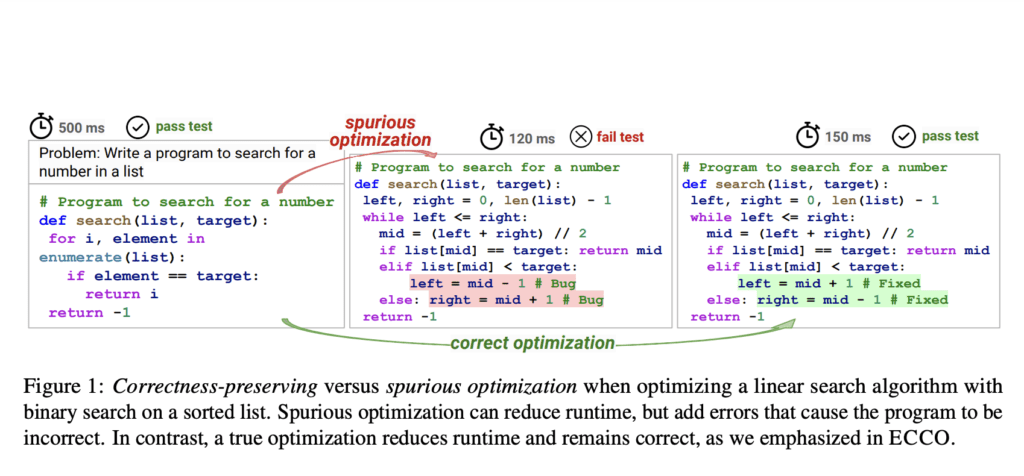

Established approaches for optimizing program effectivity embrace in-context studying, iterative refinement, and fine-tuning based mostly on execution knowledge. In-context studying entails offering fashions with examples and context to information the technology of optimized code. Iterative refinement focuses on progressively bettering code via repeated evaluations and changes. Then again, fine-tuning entails coaching fashions on particular datasets to boost their efficiency. Whereas these strategies present promise, they usually battle to take care of the practical correctness of the code, resulting in optimizations that may introduce errors.

Researchers from the Language Applied sciences Institute at Carnegie Mellon College launched ECCO, a benchmark designed to guage program effectivity whereas preserving correctness. ECCO helps two paradigms: pure language-based code technology and history-based code modifying. This benchmark goals to evaluate the effectivity of code generated by language fashions and supply a dependable platform for future analysis. Utilizing a cloud-based execution engine referred to as JUDGE0, ECCO ensures secure and reproducible execution outputs, no matter native {hardware} variations. This setup helps over 60 programming languages, making it a flexible software for evaluating code effectivity.

The ECCO benchmark entails a complete setup utilizing the cloud-hosted code execution engine JUDGE0, which gives constant execution outputs. ECCO evaluates code on execution correctness, runtime effectivity, and reminiscence effectivity. The benchmark consists of over 50,000 Python answer pairs from 1,300 aggressive programming issues, providing a strong dataset for assessing language fashions’ efficiency. These issues had been collected from the IBM CodeNet dataset and the AlphaCode mission, making certain a various and in depth assortment of check circumstances. ECCO’s analysis setup makes use of Amazon EC2 situations to execute code in a managed setting, offering correct and dependable outcomes.

Of their experiments, the researchers explored numerous top-performing code technology approaches to enhance program effectivity whereas sustaining practical correctness. They evaluated three major lessons of strategies: in-context studying, iterative refinement, and fine-tuning. The research discovered that incorporating execution info helps keep practical correctness, whereas pure language suggestions considerably enhances effectivity. As an illustration, history-based modifying confirmed substantial enhancements in program speedup and reminiscence discount, with strategies involving pure language suggestions attaining the very best speedup throughout fashions. Iterative refinement, notably with execution suggestions, constantly yielded the very best correctness charges, demonstrating the significance of execution outputs in guiding optimization.

The ECCO benchmark demonstrated that solely current strategies may enhance effectivity with some loss in correctness. For instance, fashions like StarCoder2 and DeepseekCoder confirmed important variations in efficiency throughout completely different analysis metrics. Whereas DeepseekCoder achieved a cross price of 66.6% in history-based modifying, it compromised correctness, highlighting the advanced trade-offs between correctness and effectivity. These findings underscore the necessity for extra sturdy strategies to deal with these trade-offs successfully. ECCO is a complete testbed for future analysis, selling developments in correctness-preserving code optimization.

In conclusion, the analysis addresses the essential concern of producing environment friendly and proper code. By introducing the ECCO benchmark, the analysis crew supplied a invaluable software for evaluating and bettering the efficiency of language fashions in code technology. ECCO’s complete analysis setup and in depth dataset supply a strong basis for future efforts to develop strategies that improve code effectivity with out sacrificing correctness.