Natural Language Processing (NLP), despite its progress, faces the persistent problem of hallucination, where models generate incorrect or nonsensical information. Researchers have introduced Retrieval-Augmented Generation (RAG) systems to mitigate this problem by incorporating external information retrieval to improve the accuracy of generated responses.

The open question, however, is how reliable and effective RAG systems are at providing accurate responses across different domains. Existing benchmarks primarily focus on general knowledge and fall short when evaluating RAG models in specialized fields such as finance, healthcare, and law. This limitation arises from the difficulty of curating high-quality datasets that can comprehensively test the models’ ability to handle domain-specific information.

Existing methods for evaluating RAG systems include established NLP metrics such as F1, BLEU, ROUGE-L, and EM (exact match) for answer generation, and Hit Rate, MRR, and NDCG for retrieval assessment. More recent approaches use LLM-generated data to evaluate contextual relevance, faithfulness, and informativeness. However, these metrics often lack the nuance required to assess the generative capabilities of RAG systems in vertical domains. Consequently, a more robust evaluation framework is needed to address these shortcomings and provide a detailed assessment of RAG performance in specialized areas.
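To make the established metrics concrete, here is a minimal sketch of how Hit Rate, reciprocal rank (averaged over queries to get MRR), NDCG, EM, and token-level F1 are commonly computed. The function names, the binary-relevance assumption for NDCG, and the whitespace tokenization are illustrative choices, not details taken from the RAGEval work.

```python
import math
from collections import Counter

def hit_at_k(ranked_ids, relevant_ids, k):
    """1.0 if any relevant document appears in the top k results, else 0.0."""
    return 1.0 if any(d in relevant_ids for d in ranked_ids[:k]) else 0.0

def reciprocal_rank(ranked_ids, relevant_ids):
    """1 / rank of the first relevant document; 0.0 if none is retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0

def ndcg_at_k(ranked_ids, relevant_ids, k):
    """NDCG with binary relevance (an assumption made for this sketch)."""
    dcg = sum(
        1.0 / math.log2(rank + 1)
        for rank, doc_id in enumerate(ranked_ids[:k], start=1)
        if doc_id in relevant_ids
    )
    ideal_hits = min(len(relevant_ids), k)
    idcg = sum(1.0 / math.log2(rank + 1) for rank in range(1, ideal_hits + 1))
    return dcg / idcg if idcg > 0 else 0.0

def exact_match(prediction, reference):
    """Case-insensitive exact match after trimming whitespace."""
    return 1.0 if prediction.strip().lower() == reference.strip().lower() else 0.0

def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall (whitespace tokens)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

MRR is the mean of `reciprocal_rank` over all queries in a test set. Because these scores rest on document IDs and surface token overlap, they can miss whether a long generated answer is factually complete, which is the gap described above.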

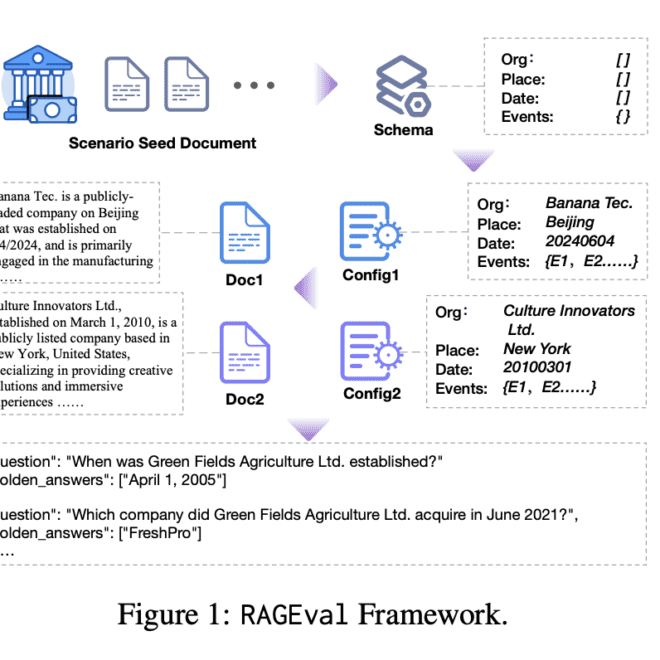

Researchers from Tsinghua University, Beijing Normal University, University of Chinese Academy of Sciences, and Northeastern University introduced the RAGEval framework to address these challenges. The framework automatically generates evaluation datasets tailored to specific scenarios in various vertical domains. The process begins by summarizing a schema from seed documents, then derives configurations from that schema, generates diverse documents, and constructs question-answer pairs based on these configurations. The framework then evaluates model responses using novel metrics that focus on factual accuracy.

The proposed method, RAGEval, employs a “schema-configuration-document-QAR-keypoint” pipeline to ensure the robustness and reliability of the evaluation process. This involves generating a schema that captures essential domain-specific knowledge, creating configurations from this schema, and generating diverse documents. These documents are then used to generate questions and reference answers, forming QAR triples that are evaluated for completeness, hallucination, and irrelevance. This approach is intended to ensure that the evaluation datasets are rich in factual information and logically coherent.
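The text names the three scores but does not give their formulas. As a rough sketch, assume each key point extracted from a reference answer has already been labelled (for example by an LLM judge) as covered by the model's answer, contradicted by it, or missing from it; each score can then be read as the fraction of key points in each category. The label names and the `keypoint_scores` function are hypothetical, introduced only for illustration.

```python
from collections import Counter

# Hypothetical labels for how a model answer treats each reference key point.
LABELS = ("covered", "contradicted", "missing")

def keypoint_scores(labels):
    """Turn per-key-point labels into completeness, hallucination, irrelevance.

    Assumption: each score is the share of key points with the matching label,
    so the three values always sum to 1.
    """
    if not labels:
        raise ValueError("at least one key point is required")
    unknown = set(labels) - set(LABELS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    counts = Counter(labels)
    total = len(labels)
    return {
        "completeness": counts["covered"] / total,
        "hallucination": counts["contradicted"] / total,
        "irrelevance": counts["missing"] / total,
    }

if __name__ == "__main__":
    print(keypoint_scores(["covered", "covered", "contradicted", "missing"]))
```

Under this reading, an answer that covers two of four key points, contradicts one, and omits one would score 0.5 for completeness and 0.25 each for hallucination and irrelevance.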

A hybrid approach is used to generate these configurations, combining rule-based and LLM-based methods to assign values to the schema elements. Rule-based methods ensure high accuracy and consistency, particularly for structured data, while LLMs are used to generate more complex or diverse content. This method produces a wide range of high-quality, diverse configurations, helping ensure that the generated documents are accurate and contextually relevant.
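The hybrid idea can be sketched as a simple dispatcher: structured fields are filled by deterministic rules, and free-text fields are delegated to a language model. Everything below is an assumption made for illustration, including the schema fields, the `rule:`/`llm` tags, and the `stub_llm` stand-in for a real model call; it is not the framework's actual implementation.

```python
import random
from datetime import date, timedelta
from typing import Callable, Dict

# Hypothetical schema: each field is tagged with how its value is produced.
SCHEMA: Dict[str, str] = {
    "company_name": "llm",
    "report_date": "rule:date",
    "revenue_musd": "rule:amount",
    "summary": "llm",
}

def rule_value(kind: str, rng: random.Random) -> str:
    """Rule-based generation for structured fields (always well-formed)."""
    if kind == "date":
        offset = timedelta(days=rng.randrange(0, 365 * 4))
        return (date(2020, 1, 1) + offset).isoformat()
    if kind == "amount":
        return str(rng.randrange(10, 5000))
    raise ValueError(f"no rule for kind {kind!r}")

def build_config(schema: Dict[str, str], llm: Callable[[str], str],
                 seed: int = 0) -> Dict[str, str]:
    """Fill every schema field using either a rule or the supplied LLM callable."""
    rng = random.Random(seed)
    config = {}
    for field, source in schema.items():
        if source.startswith("rule:"):
            config[field] = rule_value(source.split(":", 1)[1], rng)
        elif source == "llm":
            config[field] = llm(f"Propose a plausible value for '{field}'.")
        else:
            raise ValueError(f"unknown source {source!r} for field {field!r}")
    return config

def stub_llm(prompt: str) -> str:
    """Placeholder for a real model call, so the sketch runs offline."""
    return f"<generated for: {prompt}>"

if __name__ == "__main__":
    print(build_config(SCHEMA, stub_llm, seed=1))
```

Passing the model in as a callable keeps the rule-based part testable on its own and makes it easy to swap in a real client later.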

Experimental results showed that the RAGEval framework is highly effective at generating accurate, safe, and rich content across various domains. Human evaluation highlighted the robustness of the method, showing that the generated documents were clear, specific, and closely resembled real-world documents. Moreover, validation of the automated evaluation metrics showed a high degree of alignment with human judgment, supporting the reliability of these metrics in reflecting model performance.

GPT-4o performed best overall, achieving the highest Completeness scores of 0.5187 for Chinese and 0.6845 for English. However, the gap with the top-performing open-source models, such as Qwen1.5-14B-chat and Llama3-8B-Instruct, was relatively small. Qwen1.5-14B-chat achieved a Completeness score of 0.4926 in Chinese, about 0.026 behind GPT-4o, while Llama3-8B-Instruct scored 0.6524 in English, about 0.032 behind. These results suggest that with further advances, open-source models have significant potential to close the performance gap with proprietary models.

In conclusion, the RAGEval framework offers a robust solution for evaluating RAG systems, addressing the limitations of existing benchmarks by focusing on domain-specific factual accuracy. This approach supports more reliable RAG models across industries and paves the way for future improvements in both proprietary and open-source models. Researchers and developers are encouraged to use frameworks like RAGEval to check that their models meet the specific needs of their application domains.