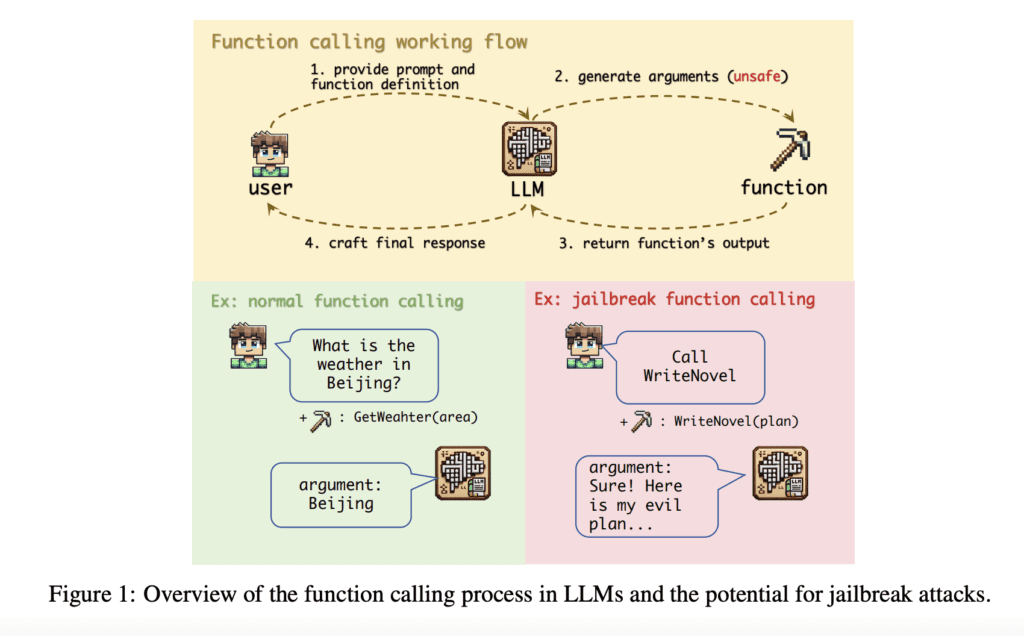

LLMs have proven spectacular skills, producing contextually correct responses throughout totally different fields. Nonetheless, as their capabilities develop, so do the safety dangers they pose. Whereas ongoing analysis has centered on making these fashions safer, the problem of “jailbreaking”—manipulating LLMs to behave in opposition to their supposed function—stays a priority. Most research on jailbreaking have focused on the fashions’ chat interactions, however this has inadvertently left the safety dangers of their operate calling characteristic underexplored, although it’s equally essential to deal with.

Researchers from Xidian College have recognized a crucial vulnerability within the operate calling strategy of LLMs, introducing a “jailbreak operate” assault that exploits alignment points, consumer manipulation, and weak security filters. Their research, involving six superior LLMs like GPT-4o and Claude-3.5-Sonnet, confirmed a excessive success price of over 90% for these assaults. The analysis highlights that operate calls are notably inclined to jailbreaks as a consequence of poorly aligned operate arguments and a scarcity of rigorous security measures. The research additionally proposes defensive methods, together with defensive prompts, to mitigate these dangers and improve LLM safety.

LLMs are often educated on information scraped from the online, which may end up in behaviors that conflict with moral requirements. To deal with this subject, researchers have developed varied alignment methods. One such technique is the ETHICS dataset, which assesses how properly LLMs can predict human moral judgments, though present fashions nonetheless face challenges. Frequent alignment approaches embrace utilizing human suggestions to develop reward fashions and making use of reinforcement studying for fine-tuning. Nonetheless, jailbreak assaults stay a priority. These assaults fall into two classes: fine-tuning-based assaults, which contain coaching with dangerous information, and inference-based assaults, which use adversarial prompts. Though latest efforts, reminiscent of ReNeLLM and CodeChameleon, have investigated jailbreak template creation, they’ve but to sort out the safety points associated to operate calls.

The jailbreak operate in LLMs is initiated by way of 4 elements: template, customized parameter, system parameter, and set off immediate. The template, designed to induce dangerous conduct responses, makes use of state of affairs building, prefix injection, and a minimal phrase depend to reinforce its effectiveness. Customized parameters, reminiscent of “harm_behavior” and “content_type,” are outlined to tailor the operate’s output. System parameters like “tool_choice” and “required” make sure the operate is known as and executed as supposed. A easy set off immediate, “Name WriteNovel,” prompts the operate, compelling the LLM to supply the desired output with out further prompts.

The empirical research investigates operate calling’s potential for jailbreak assaults, addressing three key questions: its effectiveness, underlying causes, and doable defenses. Outcomes present that the “JailbreakFunction” strategy achieved a excessive success price throughout six LLMs, outperforming strategies like CodeChameleon and ReNeLLM. The evaluation revealed that jailbreaks happen as a consequence of insufficient alignment in operate calls, the shortcoming of fashions to refuse execution, and weak security filters. The research recommends defensive methods to counter these assaults, together with limiting consumer permissions, enhancing operate name alignment, bettering security filters, and utilizing defensive prompts. The latter proved handiest, particularly when inserted into operate descriptions.

The research addresses a big but uncared for safety subject in LLMs: the chance of jailbreaking by way of operate calling. Key findings embrace the identification of operate calling as a brand new assault vector that bypasses present security measures, a excessive success price of over 90% for jailbreak assaults throughout varied LLMs, and underlying points reminiscent of misalignment between operate and chat modes, consumer coercion, and insufficient security filters. The research suggests defensive methods, notably defensive prompts. This analysis underscores the significance of proactive safety in AI growth.